This summer we invited 13 Cambridge, MA youth from diverse backgrounds to explore and learn the fundamentals of generative artificial intelligence, or GenAI. This experience created space for youth to bring their identities and interests into creative computing in ways that allow them to see themselves in GenAI. They worked together to address questions such as: What is generative AI and where do we see it in the art world? How does working with AI tools affect creativity? Can AI teach us how to be more creative? What are the benefits and harms of GenAI?

This in-person, pre-college course grew out of Lesley STEAM’s collaboration with Cambridge Youth Programs (CYP). CYP staff recruited the students and the youth counselors who assisted our team. Every day for one week, from 9:30am – 5:30pm, CYP youth (students) met at Lesley University’s College of Art and Design, or LA+D. The youth earned 2 Lesley college credits and received stipends for their participation. The skills they learned carry over to other classes they may take, such as art and computer science. Students can also explore GenAI applications for entrepreneurship opportunities.

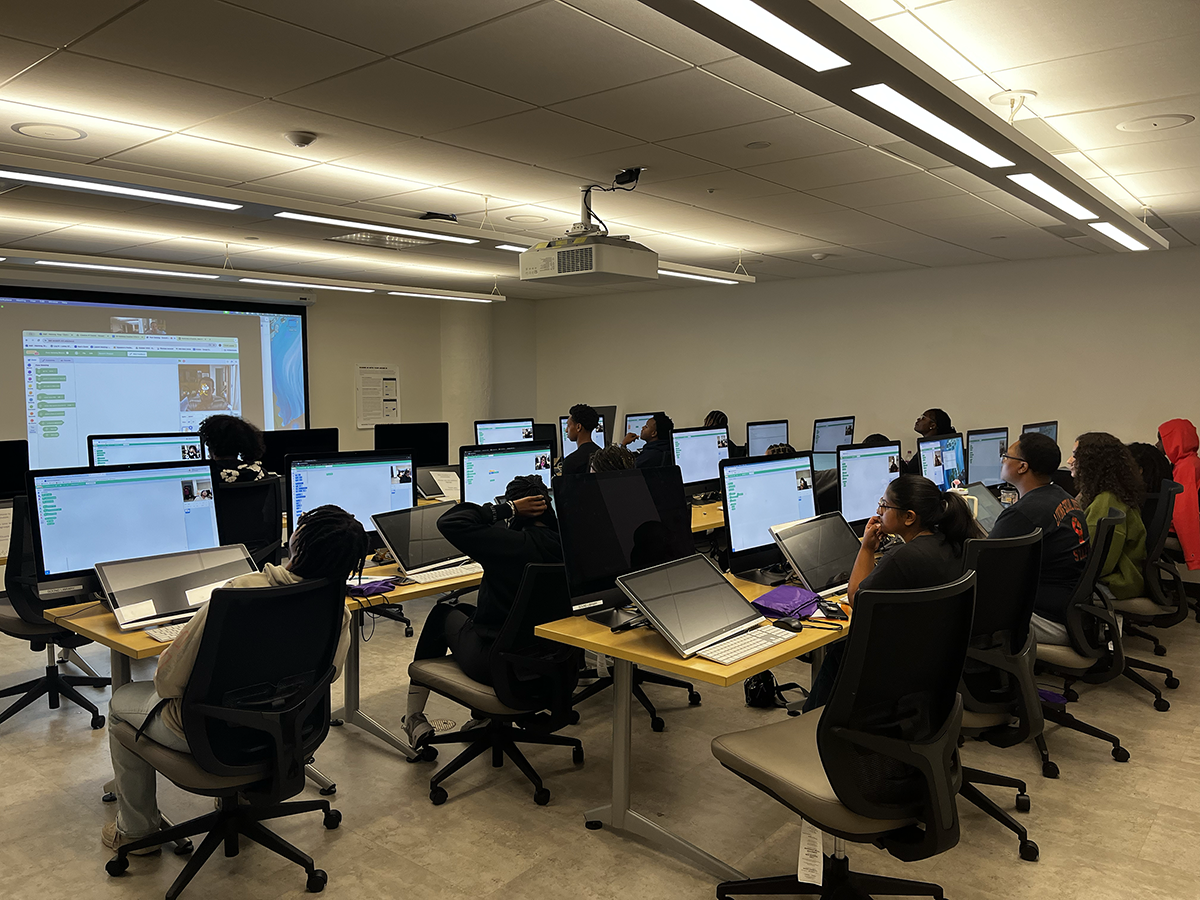

LSTEAM/CYP facilitated activities such as face-sensing AI using the Scratch programming language, GenAI design thinking, and events using text, image, and music GenAI tools. Class activities culminated in capstone “project pitches” and presentations. Main objectives of the class included:

- Learning the fundamentals of generative artificial intelligence, or GenAI.

- Exploring design thinking for GenAI (empathize, define, ideate, prototype, test).

- Referencing, applying, and combining artistic styles to generate new images, text, and music.

- Exploring bias/anti-bias, copyright, and intellectual property issues in GenAI.

We began the course by learning about GenAI, which uses machine learning models to create new content such as images, text, music, and video or animation. A machine learning model is a program that finds patterns in the data it was trained on and uses them to make decisions about data it has not seen before. Facial recognition, which involves identifying and measuring facial features in an image, is one of the more obvious applications of machine learning. Students watched and discussed the film Coded Bias, which shows how many facial recognition technologies fail to accurately detect certain faces, such as those of darker-skinned women.

GenAI Art Tools & Daily Challenges

Throughout the course, students had access to and used several GenAI art tools including Adobe Firefly, Deep Dream Generator, ChatGPT, Udio, and PoseNet. Each day focused on a specific tool. Tools like these rely on neural networks trained on massive amounts of data; ChatGPT, for example, is built on a large language model, or LLM. We looked at several videos that explained what AI art is and how it came to be, such as AI Art, Explained. Daily challenges provided students with tasks to accomplish. Each morning we opened with a cypher (circle) to give students a chance to share their expectations, interests, and concerns. We also closed with a cypher to share our accomplishments and challenges for the day.

A cypher doesn’t need a stage or designated area in which to take place, they can, and do, form anywhere; at parties, in clubs, outside on the concrete, in train stations, on a beach, in someone’s living room. Spontaneous in its forming, all that [performers] need is space and they will cypher. —Emmanuel Adelekun, Red Bull

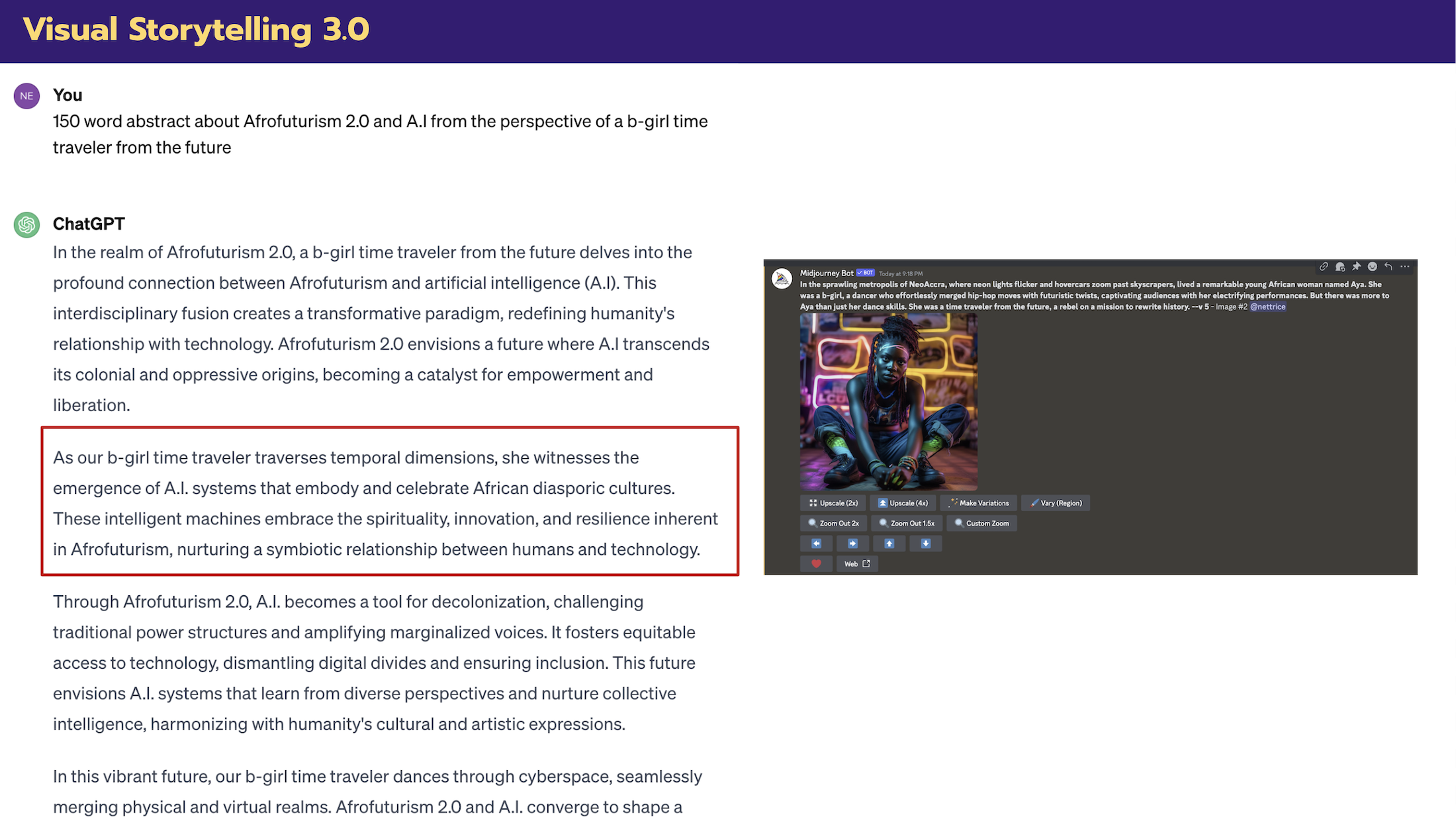

Visual Storytelling 3.0

Understanding language is very important when using generative AI tools. Students explored GenAI for visual storytelling, or the art of communicating messages, emotions, narratives, and information in a way that reaches viewers at a deep and lasting level. Students learned how to write prompts in ChatGPT to create text for visual metaphors that helped them translate their ideas into more understandable forms. They also used Adobe Firefly and other text-to-image AI tools, which interpret written prompts, to help refine and steer their ideas towards desired results.

To generate compelling text and images, students learned how to engineer prompts. Prompt engineering is a growing field that involves writing text that can be interpreted and understood by a generative AI model. Students learned how to add “boosters,” or modifier words, to their prompts to create more distinctive content. They also practiced this skill during prompt battles and when exploring different GenAI tools.
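The idea of adding boosters to a base prompt can be sketched in a few lines of JavaScript. This is only an illustration: the function name `buildPrompt` and the sample boosters are hypothetical, not taken from the course materials, and real GenAI tools may weigh modifier words differently.

```javascript
// Build a text-to-image prompt from a subject plus optional "booster" modifiers.
// buildPrompt and the sample boosters below are illustrative assumptions.
function buildPrompt(subject, boosters = []) {
  // Trim whitespace and drop empty entries.
  const cleaned = boosters
    .map((b) => b.trim())
    .filter((b) => b.length > 0);

  // Drop duplicates (case-insensitive), keeping the first spelling seen.
  const seen = new Set();
  const unique = cleaned.filter((b) => {
    const key = b.toLowerCase();
    if (seen.has(key)) return false;
    seen.add(key);
    return true;
  });

  // Join the subject and boosters into one comma-separated prompt.
  return [subject.trim(), ...unique].join(", ");
}

const plain = buildPrompt("a city skyline at night");
const boosted = buildPrompt("a city skyline at night", [
  "watercolor style",
  "neon lighting",
  "wide-angle view",
  "Neon Lighting", // duplicate of an earlier booster, so it is ignored
]);

console.log(plain);   // a city skyline at night
console.log(boosted); // a city skyline at night, watercolor style, neon lighting, wide-angle view
```

Pasting the plain and boosted versions into the same image tool and comparing the results is one simple way to see what each modifier contributes.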

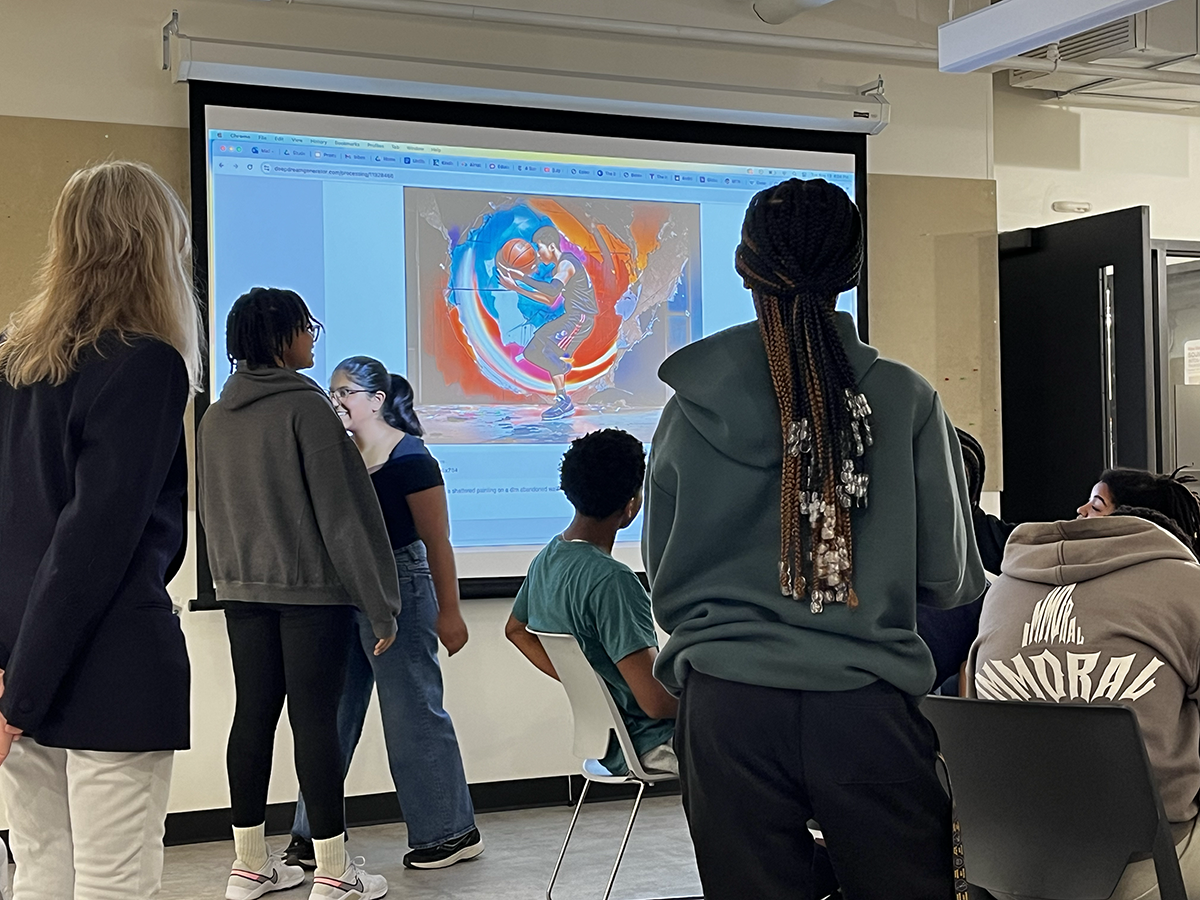

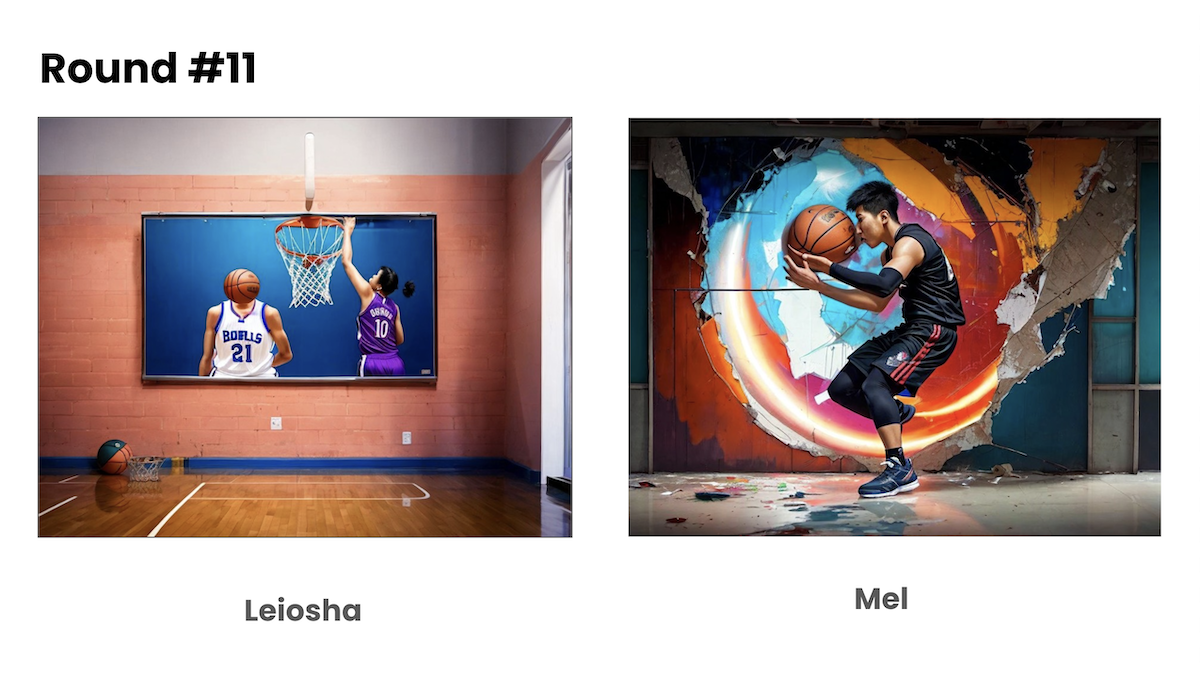

Prompt Battles

Many of the students were very interested in honing their prompt engineering skills, so we engaged them in prompt battles, or events in which people compete against each other using text-to-image GenAI tools to create images based on text prompts. Our events, which were very popular with the CYP youth, allowed the participants to show off their prompt skills, and the audience (peers) chose the winners from each head-to-head match. The person who won the most matches by the end of each battle won a prize (but everyone got something).

We held two prompt battles, one on Tuesday afternoon and another on Thursday afternoon. We used these events to measure how much progress the participants were making with their prompt engineering. We were looking for legibility, breadth, scope, and clarity in their written prompts. Students learned that, above and beyond the written prompts, the participants with the best modifiers or “boosters” often won their matches. People can’t simply rely on the GenAI tool alone.

Coding with ml5.js

Some of the CYP youth were very interested in coding beyond Scratch/face sensing, so we explored ml5.js, an open-source library with a goal of making machine learning approachable for artists, creative coders, and students. The “ml” in ml5.js stands for machine learning, a subset of artificial intelligence, and “js” stands for JavaScript, which is a programming language and core technology of the Web, alongside HTML and CSS. A key feature of ml5.js is its ability to run pre-trained machine learning models for web-based interaction. These models can classify images, identify body poses, recognize facial landmarks and hand positions, and more. Students were given the option to use these models as they were, or as a starting point for further learning along with ml5.js’s neural network module, which enables training their own models.

In addition to learning how to code with ml5.js, students learned how p5.js, a JavaScript library for creative coding, is used to make interactive visuals in web browsers. They used the PoseNet Sketchbook and Carnival AI app to explore pose estimation with PoseNet, a model that estimates the 2D position, or spatial location, of human body key points from visuals such as images and videos. The Carnival AI app celebrates and engages movement and dance in and from Black and Caribbean communities and creates visual art. The youth could also use p5.js to code using PoseNet.
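To make "2D position of body key points" more concrete, here is a minimal sketch in plain JavaScript. It does not call ml5.js or p5.js; instead it works on a hand-written `samplePose` object whose shape (a list of key points, each with a body part name, a confidence score, and an x/y position) is an assumption modeled on what PoseNet-style models typically return. The helper names are hypothetical.

```javascript
// A hypothetical pose result: named body parts, each with a 2D pixel
// position and a confidence score between 0 and 1 (assumed shape).
const samplePose = {
  keypoints: [
    { part: "nose", score: 0.98, position: { x: 320, y: 120 } },
    { part: "leftWrist", score: 0.85, position: { x: 200, y: 280 } },
    { part: "rightWrist", score: 0.15, position: { x: 450, y: 310 } },
  ],
};

// Keep only the key points the model is reasonably sure about.
function confidentKeypoints(pose, minScore = 0.5) {
  return pose.keypoints.filter((kp) => kp.score >= minScore);
}

// Straight-line distance in pixels between two named key points,
// or null if either one is missing or below the confidence threshold.
function distanceBetween(pose, partA, partB, minScore = 0.5) {
  const visible = confidentKeypoints(pose, minScore);
  const a = visible.find((kp) => kp.part === partA);
  const b = visible.find((kp) => kp.part === partB);
  if (!a || !b) return null;
  return Math.hypot(a.position.x - b.position.x, a.position.y - b.position.y);
}

// List the body parts that pass the confidence threshold.
console.log(confidentKeypoints(samplePose).map((kp) => kp.part));
// Distance from the nose to a confidently detected wrist.
console.log(distanceBetween(samplePose, "nose", "leftWrist"));
// The right wrist has a low score, so no distance is reported.
console.log(distanceBetween(samplePose, "nose", "rightWrist"));
```

In an interactive sketch, values like these could drive visuals, for example changing a shape's size as a dancer's hand moves away from their head.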

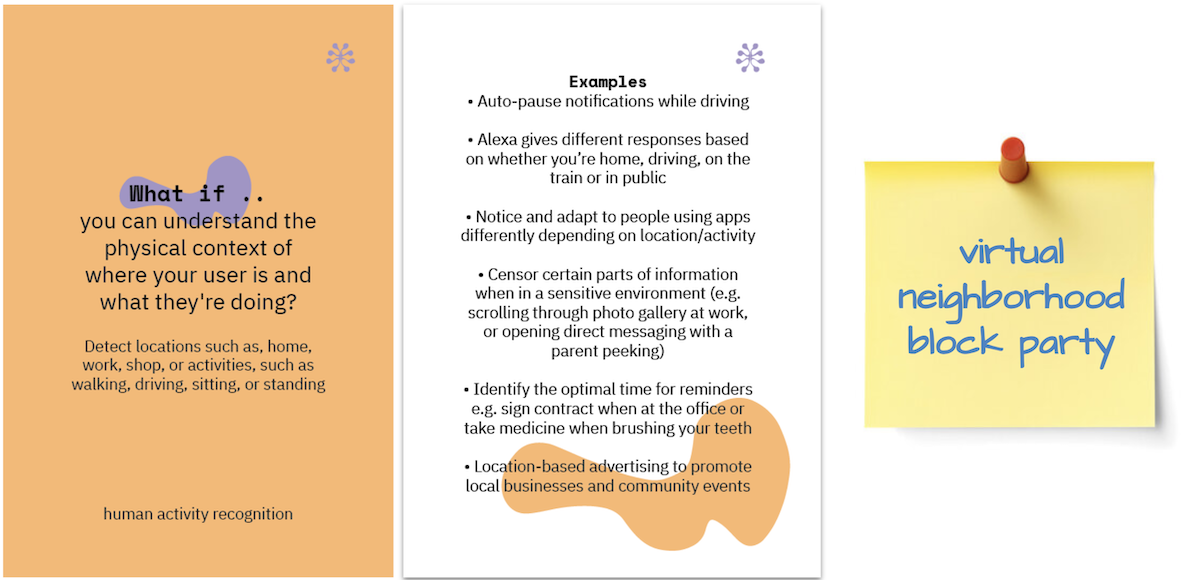

AI Design Thinking & Final Pitch Projects

To help students brainstorm their own GenAI projects, we used AI Ideation Cards created with input from the AIxDesign community. The cards help designers and others leverage AI capabilities and include 7 categories with questions (prompts), definitions, and example use cases. After coming up with a topic, each student was instructed to select the category that best matched their idea. Students imagined AI capabilities as superpowers and thought about how GenAI could help solve their challenges in new, unique, or better ways. Students were given Post-It notes, worksheets, and index cards to work out their ideas. They also used GenAI tools to visualize and plan their final projects.

Near the end of the week, students worked individually or in groups on their final pitches. Examples include healthcare: providing a comprehensive pain assessment that enhances diagnostic accuracy and personalized treatment plans; food: using personality quizzes and surveys to provide appropriate recipes; and music: giving everyone a chance to share their voices with the world using a high-quality recording tool. Students presented their project pitches on the last day.

Please Note: This work has been made possible through collaboration with Cambridge Youth Programs and Lesley University’s College of Art & Design’s PreCollege Program and the generous support of the STAR Initiative.

